BHDB application


Joined: 9 Nov 17 · Posts: 21 · Credit: 563,207,000 · RAC: 0

Alessio Susi wrote: I have 33 tasks completed but I can't upload the results. What's the problem?

I uploaded about 2000 tasks during the last few days. It went slowly and I had quite a few retries, but it generally worked for me. I have client versions 7.8.1, 7.8.3 and 7.8.4, each one on Linux. I did not alter any HTTP-related cc_config.xml items, nor do I use a proxy.

Jim1348 wrote: xii5ku wrote: The _5 tasks are affected the same way; it's just that I had a lot more _9ers than _5ers. However, I have a nasty problem with the _9 series tasks from Sunday: I wonder how that compares with the _5 (or other) tasks. The reason may simply be that the _9 tasks are larger now. But if they were divided into smaller pieces, the total upload amount would still be the same. It could just be that the earlier ones failed more often and you did not see the problem earlier. I didn't watch the other batches closely.

mmonnin wrote: xii5ku wrote: Hmm, you are right. The 4th error was reported on March 12 at 2:50:33 UTC and the 5th error at 3:19:48 UTC, yet a new task was still sent at 3:20:01 UTC.

mmonnin wrote: If only the server actually stopped sending them out after 4 errors. They should have been aborted on the server side. I aborted quite a few others that were not batch 9.

These WUs are cancelled after 4 or more clients return a task of the respective WU with an error. (It would be good if Krzysztof cancelled them earlier.) Am I interpreting the line "max # of error/total/success tasks | 4, 10, 2" incorrectly? 6 hours of processor time wasted on that one alone... :-(
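
The "max # of error/total/success tasks | 4, 10, 2" question comes down to how the server compares those limits. Below is a minimal Python sketch, assuming the semantics described in the BOINC job-submission documentation: a workunit is declared in error only when a count *exceeds* its limit (so on the 5th error with a limit of 4), and the success limit only applies while no canonical result exists. The class and function names are hypothetical; on a real server this check is done by the transitioner daemon, which runs periodically, so a task that was already queued can possibly still go out a few seconds after the limit is hit.

```python
from dataclasses import dataclass


@dataclass
class WorkunitLimits:
    # Values shown on the workunit page as "max # of error/total/success tasks | 4, 10, 2"
    max_error_results: int = 4
    max_total_results: int = 10
    max_success_results: int = 2


def wu_in_error(limits: WorkunitLimits, n_error: int, n_total: int,
                n_success: int, has_canonical: bool) -> bool:
    """Hypothetical re-creation of the server-side limit check.

    Assumes BOINC's documented rule that a limit is tripped only when a
    count is strictly greater than the limit, not when it merely reaches it.
    """
    if n_error > limits.max_error_results:
        return True  # too many client errors: no further replicas issued
    if n_total > limits.max_total_results:
        return True  # too many replicas created overall
    if n_success > limits.max_success_results and not has_canonical:
        return True  # enough successes but still no agreement between them
    return False


if __name__ == "__main__":
    limits = WorkunitLimits()
    # 4 errors: at the limit but not over it, so another replica may be sent
    print(wu_in_error(limits, n_error=4, n_total=5, n_success=0, has_canonical=False))
    # 5 errors: limit exceeded, so the workunit is marked as failed
    print(wu_in_error(limits, n_error=5, n_total=6, n_success=0, has_canonical=False))
```

Under this reading, a limit of 4 still allows a replacement after the 4th error, and only the 5th error fails the workunit, which fits the timeline in the post above.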


Joined: 29 Jan 18 · Posts: 6 · Credit: 523,333 · RAC: 0

This seems hopeless. I've had 143 files waiting for transfer for the last FOUR days. They are _5 and _9. Should I keep trying or just delete them?

Krzysztof Piszczek - wspieram ... · Joined: 4 Feb 15 · Posts: 849 · Credit: 144,180,465 · RAC: 0

It looks like that is from your network. All my tasks were sent correctly. Also, nobody else has reported this problem...

Krzysztof 'krzyszp' Piszczek · Member of Radioactive@Home team · My Patreon profile · Universe@Home on YT


Joined: 21 Feb 15 · Posts: 64 · Credit: 65,733,511 · RAC: 0

Upload and download work here. A new batch of work has started; the first few tasks finished without errors.

Conan · Joined: 4 Feb 15 · Posts: 49 · Credit: 15,956,546 · RAC: 0

@Chris, you could try stopping BOINC and then restarting it. This has worked for me on a number of occasions, but it is only a suggestion. I had no work for a while and just got some today, but I have had no upload or download issues recently. Your last contact was on the 13th of March. Hitting Update should contact the server; if you have been doing this, then there is an issue at your end, as it has now been 3 days since your last contact. Is your antivirus being picky and blocking you? Conan


Joined: 28 Nov 17 · Posts: 4 · Credit: 35,241,140 · RAC: 0

It seems like we're getting _10 WUs now! So far I've done a few dozen with no errors, but none have been verified yet either. Great to see we're back in business again!


Joined: 29 Jan 18 · Posts: 6 · Credit: 523,333 · RAC: 0

It looks like that is from your network. All my tasks were sent correctly. Also, nobody else has reported this problem...

My laptop works on Einstein, Milky Way and LHC alongside Universe@Home. All other transfers go through with absolutely no problem. Event log: 16/03/2018 10:20:16 | | Internet access OK - project servers may be temporarily down.

Update:

Is your antivirus being picky and blocking you?

Thanks for the tip. It looks like that was it. Suddenly everything is going smoothly :)


Joined: 1 Apr 15 · Posts: 49 · Credit: 30,557,740 · RAC: 0

It looks like that is from your network. All my tasks were sent correctly. Also, nobody else has reported this problem...

My PC also can't upload the _5 and _9 results. I have about 40 WUs that have been waiting to upload for 3 days. I cancelled all the remaining WUs so as not to lose more hours of work.

ASUS X570 E-Gaming · AMD Ryzen 9 3950X, 16 cores / 32 threads, 4.4 GHz · AMD Radeon Sapphire RX 480 4GB Nitro+ · Nvidia GTX 1080 Ti Gaming X Trio · 4x16 GB Corsair Vengeance RGB 3466 MHz


Joined: 4 Nov 16 · Posts: 20 · Credit: 118,453,585 · RAC: 0

It seems like we're getting _10 WUs now! So far I've done a few dozen with no errors, but none have been verified yet either. Great to see we're back in business again!

Yes, I do agree! I've done 134 so far, of which 65 are valid, none invalid, and only one single Comp.Err. Keep sending out more WUs; we're happy to crunch 'em all weekend! :-))) //Gunnar

Krzysztof Piszczek - wspieram ... · Joined: 4 Feb 15 · Posts: 849 · Credit: 144,180,465 · RAC: 0

I will set up batch 11 tonight, then I will prepare (probably tomorrow) a few million BHspins :)


Joined: 21 Feb 15 · Posts: 64 · Credit: 65,733,511 · RAC: 0

I got some WUs on my Android; they failed. As an example, look here: https://universeathome.pl/universe/result.php?resultid=33673986

Krzysztof Piszczek - wspieram ... · Joined: 4 Feb 15 · Posts: 849 · Credit: 144,180,465 · RAC: 0

I got some WUs on my Android; they failed.

This is BHSpin, not BHDB. You have probably deleted the previously downloaded application. A project reset should help.

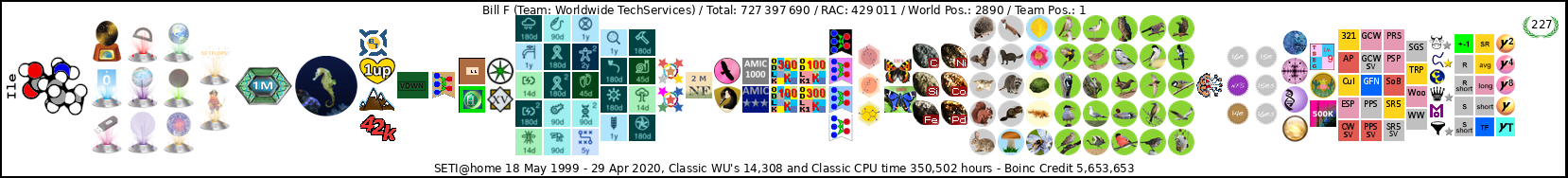

Bill F · Joined: 23 Jun 16 · Posts: 63 · Credit: 7,001,632 · RAC: 0

Thank you for the BHspins.

Bill F · In October 1969 I took an oath to support and defend the Constitution of the United States against all enemies, foreign and domestic; there was no expiration date.


Joined: 10 Jun 16 · Posts: 1 · Credit: 2,481,500 · RAC: 0

In the last few hours, 7 WUs ended with a computation error and the almost usual message "EXIT_DISK_LIMIT_EXCEEDED". The disk limit is currently set at 64 GB and at least 50 GB are free and available. In case anyone is interested, the failed WUs are:

- https://universeathome.pl/universe/workunit.php?wuid=14684530
- https://universeathome.pl/universe/workunit.php?wuid=14684344
- https://universeathome.pl/universe/workunit.php?wuid=14684402
- https://universeathome.pl/universe/workunit.php?wuid=14684397
- https://universeathome.pl/universe/workunit.php?wuid=14684345
- https://universeathome.pl/universe/workunit.php?wuid=14684353
- https://universeathome.pl/universe/workunit.php?wuid=14684392
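
The EXIT_DISK_LIMIT_EXCEEDED error is most likely not tied to the 64 GB preference or to free space on the drive. As I understand the BOINC client, each workunit carries its own per-task disk bound (`rsc_disk_bound`, set by the project), and the client aborts a task when the files in its slot directory grow past that bound. The minimal sketch below illustrates that check. The function names are hypothetical, "MB" is assumed to mean 2^20 bytes, and the 900,000,000-byte bound is only an inference from the "858.31MB" figure in the abort messages posted in this thread (9e8 / 2^20 ≈ 858.31).

```python
import os

MEGA = 1024 * 1024  # assumption: the client's "MB" is 2**20 bytes


def slot_usage_bytes(slot_dir: str) -> int:
    """Sum the sizes of all regular files under a task's slot directory."""
    total = 0
    for root, _dirs, files in os.walk(slot_dir):
        for name in files:
            path = os.path.join(root, name)
            if os.path.isfile(path) and not os.path.islink(path):
                total += os.path.getsize(path)
    return total


def exceeds_disk_bound(usage_bytes: int, rsc_disk_bound: float) -> bool:
    """Hypothetical per-task check: free space on the drive plays no role here."""
    return usage_bytes > rsc_disk_bound


def describe(usage_bytes: int, rsc_disk_bound: float) -> str:
    # Mirrors the shape of the client log message quoted in this thread
    return (f"exceeded disk limit: {usage_bytes / MEGA:.2f}MB > "
            f"{rsc_disk_bound / MEGA:.2f}MB")


if __name__ == "__main__":
    bound = 900_000_000  # inferred from "858.31MB", not confirmed by the project
    usage = int(890.09 * MEGA)
    if exceeds_disk_bound(usage, bound):
        print(describe(usage, bound))
```

Because the bound is per task, raising the disk preference in BOINC Manager would not help; only the project can raise the limit in the workunit.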


Joined: 2 Jun 16 · Posts: 169 · Credit: 317,253,046 · RAC: 0

Why are batch 3 and 5 tasks being sent out again? Please stop sending us those!


Joined: 4 Nov 16 · Posts: 20 · Credit: 118,453,585 · RAC: 0

Why are batch 3 and 5 tasks being sent out again? Please stop sending us those!

I just found this in the log on one of my dozen computers:

    Aborting task universe_bhdb_180109_5_25449975_20000_1-999999_450200_5: exceeded disk limit: 890.09MB > 858.31MB
    Aborting task universe_bhdb_180109_5_25734975_20000_1-999999_735200_6: exceeded disk limit: 896.42MB > 858.31MB
    Aborting task universe_bhdb_180109_5_25429975_20000_1-999999_430200_4: exceeded disk limit: 900.92MB > 858.31MB
    Aborting task universe_bhdb_180109_5_55024945_20000_1-999999_25200_2: exceeded disk limit: 899.32MB > 858.31MB
    Aborting task universe_bhdb_180109_5_55364945_20000_1-999999_365200_3: exceeded disk limit: 936.15MB > 858.31MB

In all, I have had around 60 erroneous tasks since yesterday. :-( It seems the disk limits are still too low. Please increase them a bit before sending out the next batch! Have a nice weekend!! //Gunnar
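
To see how far over the limit these tasks went, the abort lines can be tallied. A minimal Python sketch, assuming the log format quoted above and assuming (as the thread's "_5"/"_9" naming suggests) that the batch number is the field right after the six-digit date in the task name; the regexes and names are illustrative only, not part of BOINC.

```python
import re
from dataclasses import dataclass
from typing import List, Optional

# Matches client log lines of the form quoted in the post above.
ABORT_RE = re.compile(
    r"Aborting task (?P<task>[^\s:]+): exceeded disk limit: "
    r"(?P<used>[\d.]+)MB > (?P<limit>[\d.]+)MB"
)
# Assumed naming scheme: universe_bhdb_<YYMMDD>_<batch>_... (not confirmed by the project)
BATCH_RE = re.compile(r"^universe_bhdb_\d{6}_(?P<batch>\d+)_")


@dataclass
class DiskAbort:
    task: str
    batch: Optional[int]
    used_mb: float
    limit_mb: float

    @property
    def overshoot_mb(self) -> float:
        """How far the slot directory grew past the per-task limit."""
        return self.used_mb - self.limit_mb


def parse_aborts(log_text: str) -> List[DiskAbort]:
    aborts = []
    for m in ABORT_RE.finditer(log_text):
        b = BATCH_RE.match(m["task"])
        aborts.append(DiskAbort(
            task=m["task"],
            batch=int(b["batch"]) if b else None,
            used_mb=float(m["used"]),
            limit_mb=float(m["limit"]),
        ))
    return aborts


LOG = """\
Aborting task universe_bhdb_180109_5_25449975_20000_1-999999_450200_5: exceeded disk limit: 890.09MB > 858.31MB
Aborting task universe_bhdb_180109_5_25734975_20000_1-999999_735200_6: exceeded disk limit: 896.42MB > 858.31MB
Aborting task universe_bhdb_180109_5_25429975_20000_1-999999_430200_4: exceeded disk limit: 900.92MB > 858.31MB
Aborting task universe_bhdb_180109_5_55024945_20000_1-999999_25200_2: exceeded disk limit: 899.32MB > 858.31MB
Aborting task universe_bhdb_180109_5_55364945_20000_1-999999_365200_3: exceeded disk limit: 936.15MB > 858.31MB
"""

if __name__ == "__main__":
    aborts = parse_aborts(LOG)
    worst = max(aborts, key=lambda a: a.overshoot_mb)
    print(f"{len(aborts)} aborts, batches {sorted({a.batch for a in aborts})}")
    print(f"worst: {worst.used_mb:.2f}MB, {worst.overshoot_mb:.2f}MB over the limit")
```

For these five lines, the worst case (936.15MB) is about 78MB over the 858.31MB limit, which gives a rough idea of how much headroom "a bit" would need to be for batch 5.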

Chooka · Joined: 30 Jun 16 · Posts: 42 · Credit: 309,815,029 · RAC: 0

I've had 112 errors for the Black Hole Database v0.03. WU 14599915, for example. It's a shame the errors come at the end of hours of crunching.


Joined: 9 Feb 18 · Posts: 1 · Credit: 288,000 · RAC: 0

I've been having the "exceeded disk limit" problems as well, so I decided to look a little deeper into what the application is actually doing while it runs. You probably already know this, but I was surprised that the app seems to write duplicate copies of several result files, including one pair of files that can easily grow to 800-900MB+ (data1.dat2 and data1.dat3). Example of duplicate files (in the "slots" directory of a BHDB3 task in progress):

    # sha256sum -b data*.dat*
    9e4f9639efb55652dd12704758fca1e5ff84f36b1f053fd7deefdc75cd3f9fb9 *data0.dat
    13fa73e139c7516e11c061fba4ce5120ee5a89e48d9f311de770aa52ee4a3d80 *data0.dat2
    13fa73e139c7516e11c061fba4ce5120ee5a89e48d9f311de770aa52ee4a3d80 *data0.dat3
    ee8ebfbc970f3b22336e87fc05c83166d795a7707a54bbb6d39774a89c12e5ec *data1.dat
    d0af02bb3d08ae0042ce3854794bcf5f6592775cb9d9fe702c32c5ca5fa62a7f *data1.dat2
    d0af02bb3d08ae0042ce3854794bcf5f6592775cb9d9fe702c32c5ca5fa62a7f *data1.dat3
    cbf374f3985ddaa108b17a4f3dc7d69678f6616a64f04d7424886c2d2cf81911 *data2.dat
    6c1d6146828c22d3038ee65542e9d50fb5d5ce3b2f9ad33a87cd77cbb8cdba3b *data2.dat2
    6c1d6146828c22d3038ee65542e9d50fb5d5ce3b2f9ad33a87cd77cbb8cdba3b *data2.dat3
    # ls -1sh data*.dat*
    4.0K data0.dat
    1.6M data0.dat2
    1.6M data0.dat3
    364K data1.dat
    362M data1.dat2
    362M data1.dat3
    4.0K data2.dat
    1.5M data2.dat2
    1.5M data2.dat3

I don't want to be rude, but is it really necessary to write these duplicate `*.dat3` files? If these duplicates are not needed, I think it should be fairly simple to prevent many of the WU failures by changing the code so it does not generate these big duplicate files.
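
The same check can be scripted instead of comparing hashes by eye. In the listing above, the two copies of data1 alone already take about 724M (2 × 362M). Below is a minimal Python sketch, a hypothetical helper that is not part of BOINC or the Universe@Home app: it hashes every file matching a glob pattern in a slot directory with SHA-256, groups identical files, and reports how many bytes the redundant copies occupy. The directory path and the `data*.dat*` pattern are placeholders taken from the post.

```python
import hashlib
import sys
from collections import defaultdict
from pathlib import Path
from typing import Dict, List


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so multi-hundred-MB files don't need to fit in RAM."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def find_duplicates(slot_dir: str, pattern: str = "data*.dat*") -> Dict[str, List[Path]]:
    """Hypothetical helper: map each SHA-256 digest to the files sharing it (2+ only)."""
    groups: Dict[str, List[Path]] = defaultdict(list)
    for p in sorted(Path(slot_dir).glob(pattern)):
        if p.is_file():
            groups[sha256_of(p)].append(p)
    return {digest: paths for digest, paths in groups.items() if len(paths) > 1}


def redundant_bytes(dups: Dict[str, List[Path]]) -> int:
    """Bytes that could be saved by keeping only one copy per group."""
    return sum(paths[0].stat().st_size * (len(paths) - 1) for paths in dups.values())


if __name__ == "__main__":
    # Placeholder: pass the slot directory as the first argument
    dups = find_duplicates(sys.argv[1] if len(sys.argv) > 1 else ".")
    for digest, paths in dups.items():
        print(digest[:12], ", ".join(p.name for p in paths))
    print(f"redundant: {redundant_bytes(dups) / 2**20:.1f} MiB")
```

Hashing in fixed-size chunks keeps memory use flat even for the 362M files; the sketch only reads files and never deletes anything, since removing files from a running task's slot would likely break it.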


Joined: 26 Oct 17 · Posts: 2 · Credit: 27,648,732 · RAC: 0

I received only 7 tasks, on the 24th of March, and zero since... Of these, all the Black Hole tasks errored out and the BH Spin tasks completed. Is the project out of workunits? What are the plans?


Joined: 9 Jan 17 · Posts: 1 · Credit: 1,472,967 · RAC: 0

I have also had WUs waiting to upload. I started out with well in excess of 50, and now that we are out of time, some of them are uploading to you. I currently have 7 WUs that will not upload. There is a similar problem with NumberFields@Home: currently 6 WUs and growing.